CS-Bench


Computer Science (CS) stands as a testament to the intricacies of human intelligence, profoundly advancing the development of artificial intelligence and modern society. However, current work on large language models (LLMs) focuses heavily on benchmarks that analyze specific foundational skills (e.g., mathematics and code generation), neglecting a comprehensive evaluation of the computer science field. To bridge this gap, we introduce CS-Bench, the first bilingual (Chinese-English) benchmark dedicated to evaluating the performance of LLMs in computer science. CS-Bench comprises approximately 5K meticulously curated test samples covering 26 subfields across 4 key areas of computer science, spanning various task formats and a division into knowledge-type and reasoning-type questions. Using CS-Bench, we conduct a comprehensive evaluation of over 30 mainstream LLMs, revealing the relationship between CS performance and model scale. We also quantitatively analyze the reasons for failures in existing LLMs and highlight directions for improvement, including knowledge supplementation and CS-specific reasoning. Further cross-capability experiments show a high correlation between LLMs' capabilities in computer science and their abilities in mathematics and coding. Moreover, expert LLMs specialized in mathematics and coding also perform strongly in several CS subfields. Looking ahead, we envision CS-Bench serving as a cornerstone for LLM applications in the CS field and paving new avenues for assessing LLMs' diverse reasoning capabilities.

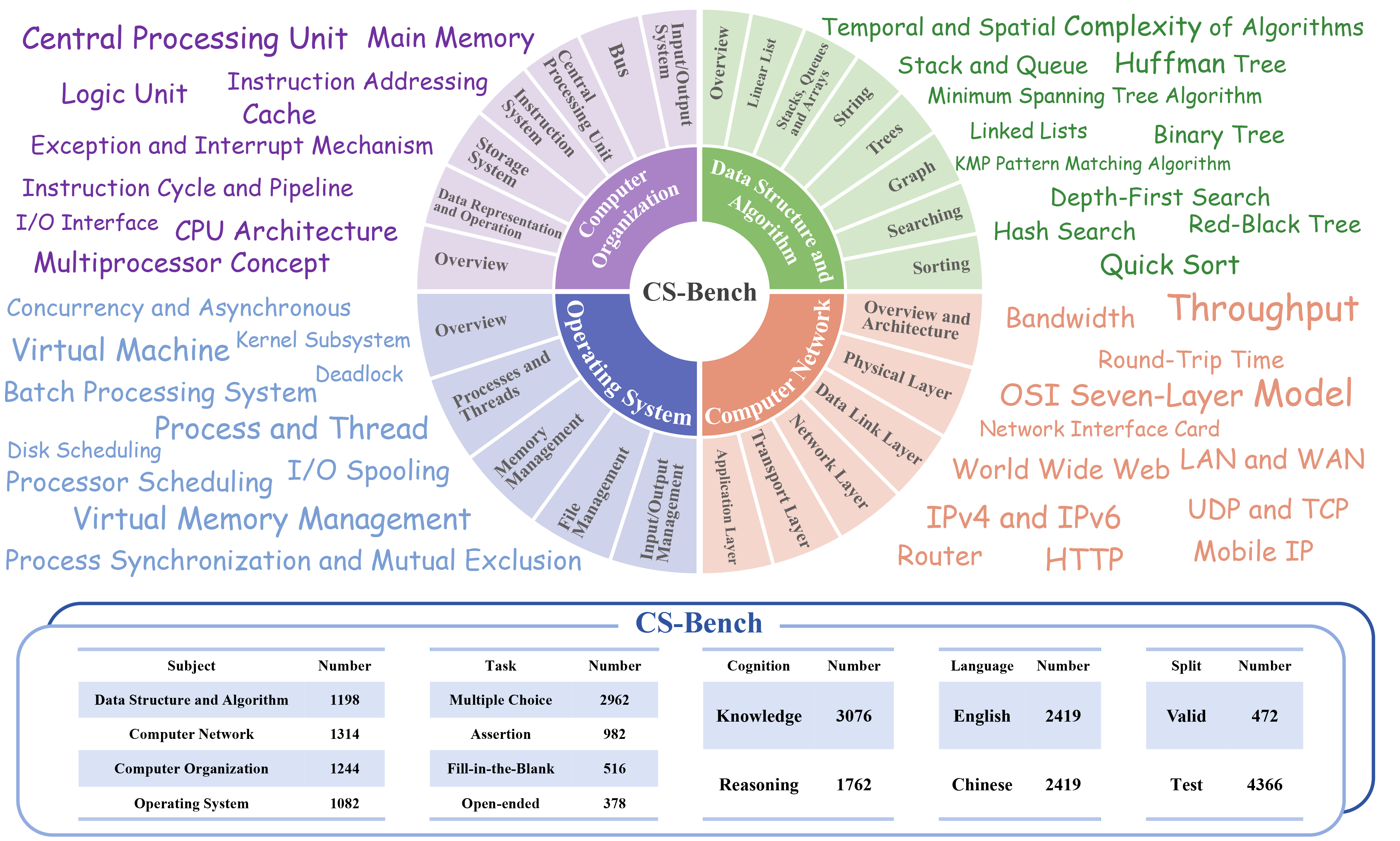

Overview diagram and statistics of CS-Bench.

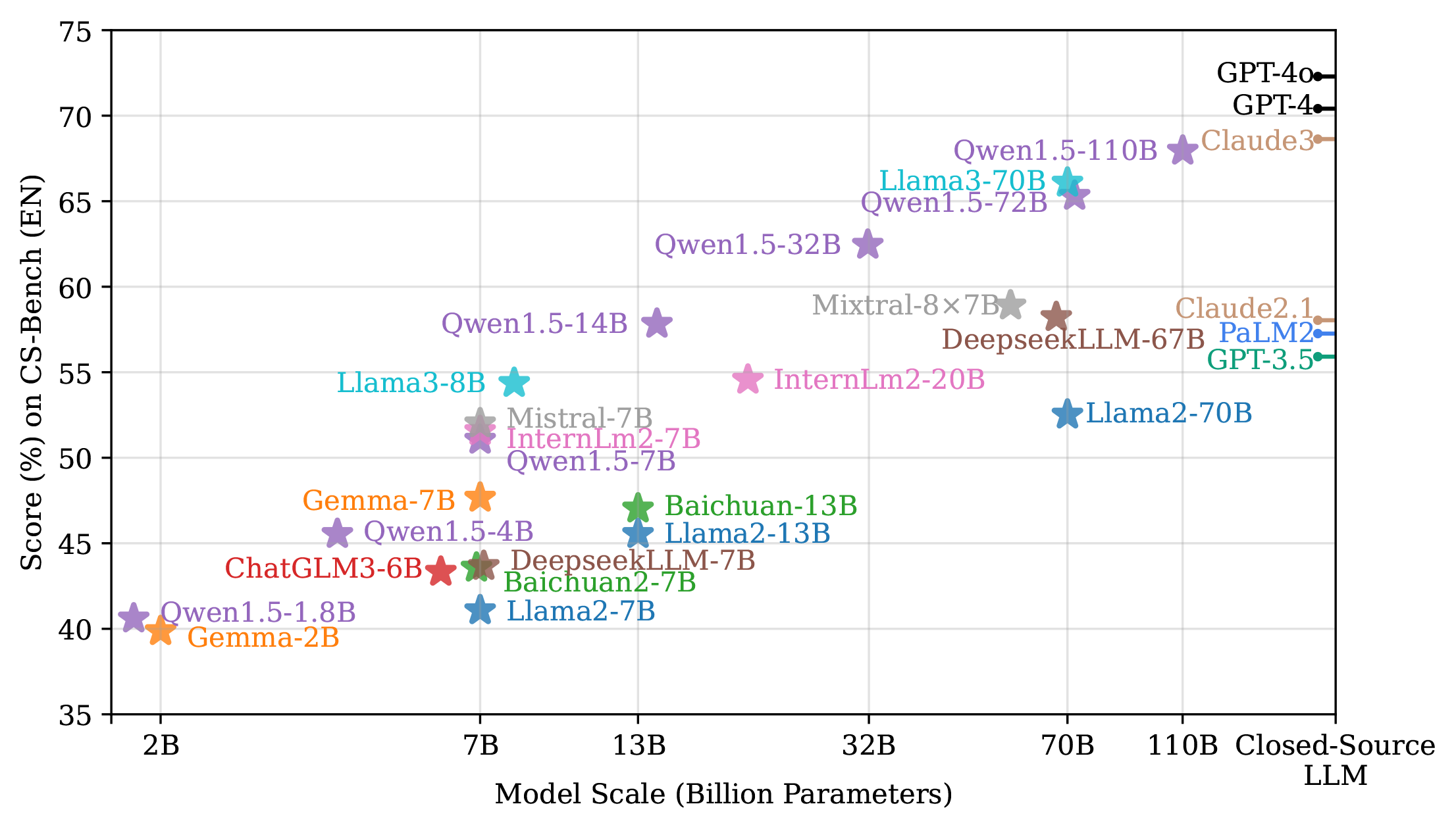

The leaderboard of LLMs on CS-Bench (EN).

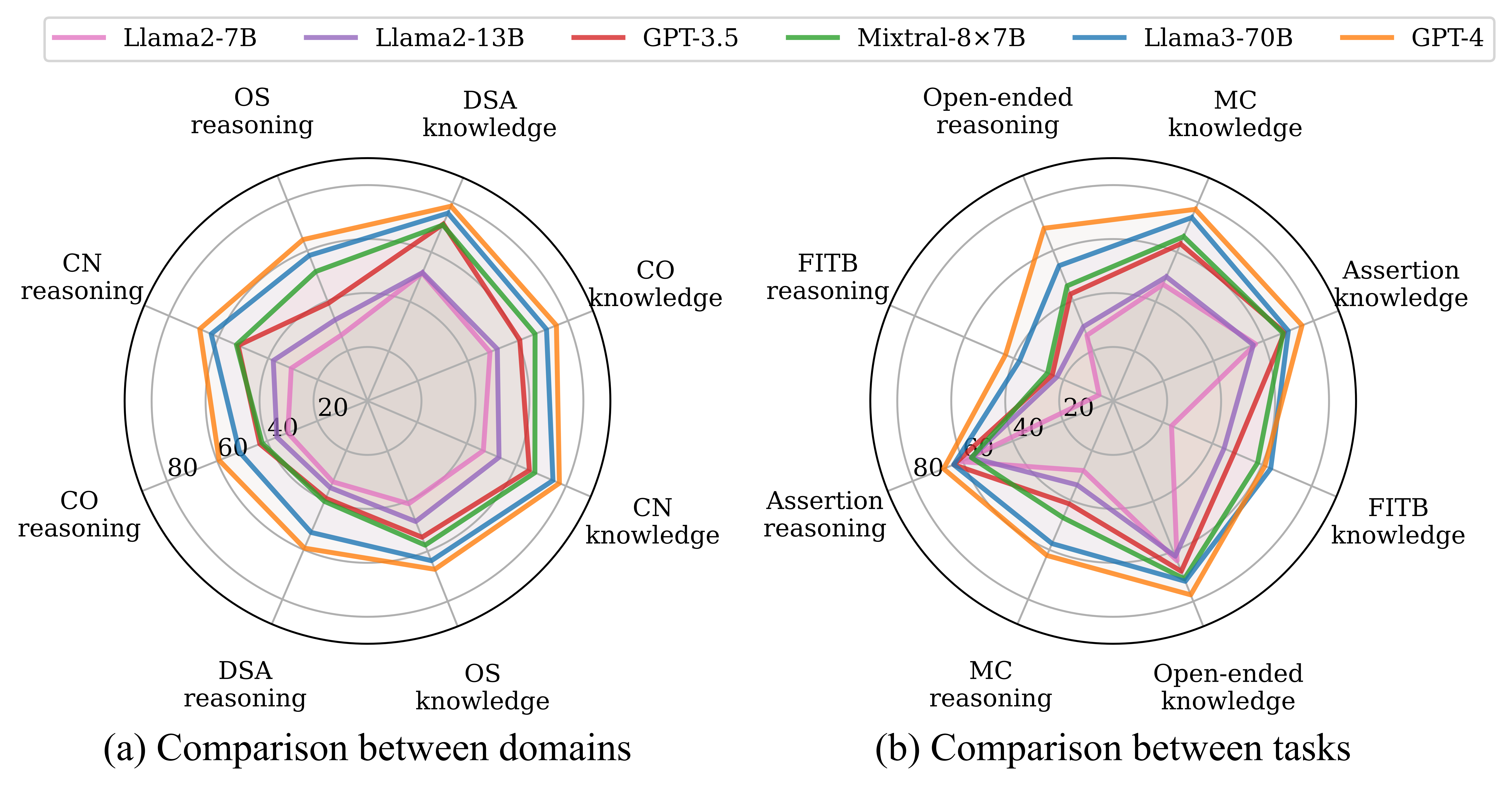

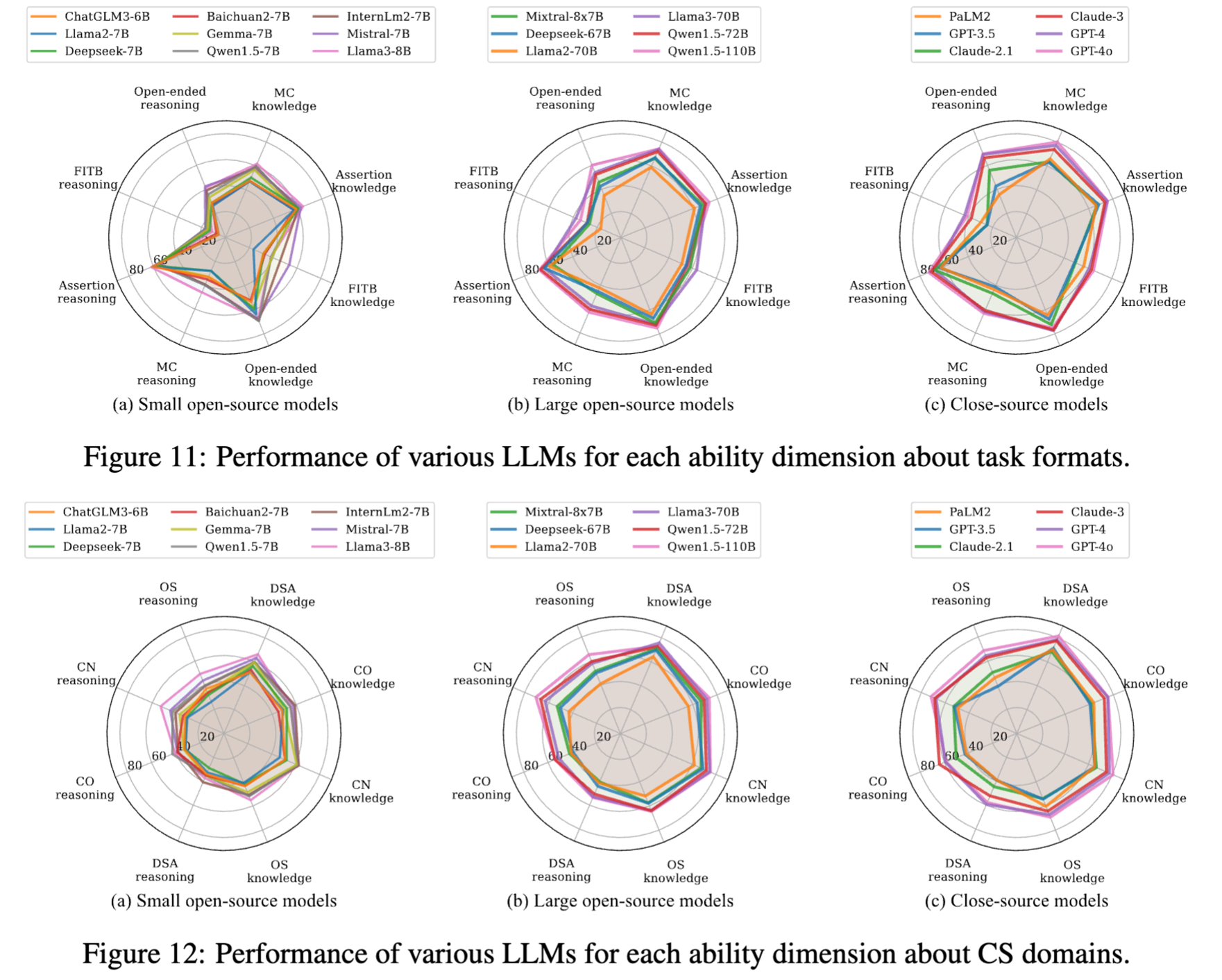

Comparison of representative LLMs' scores across different domains and tasks on CS-Bench (EN).

CS-Bench (EN)


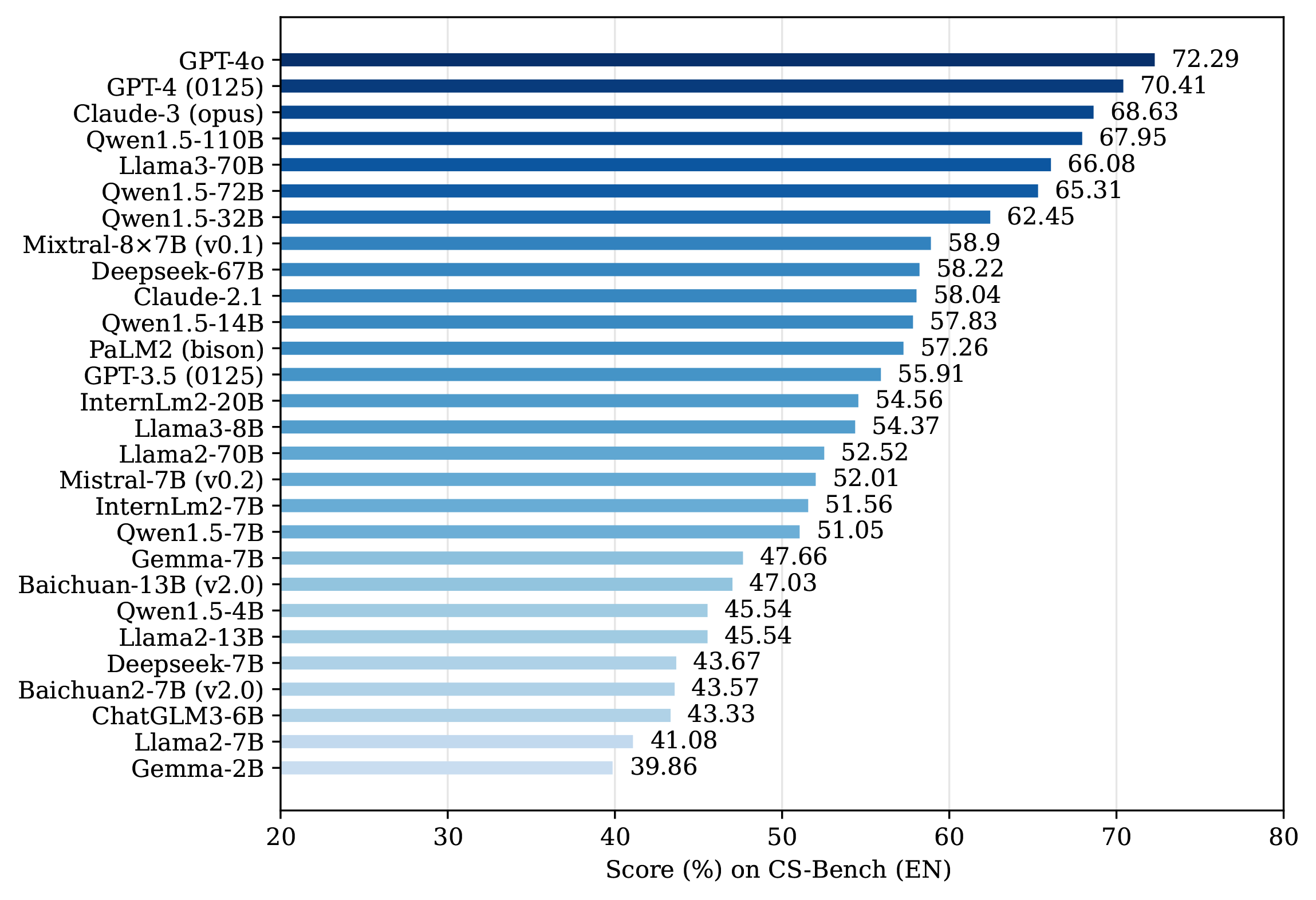

The leaderboard of LLMs on CS-Bench (EN).

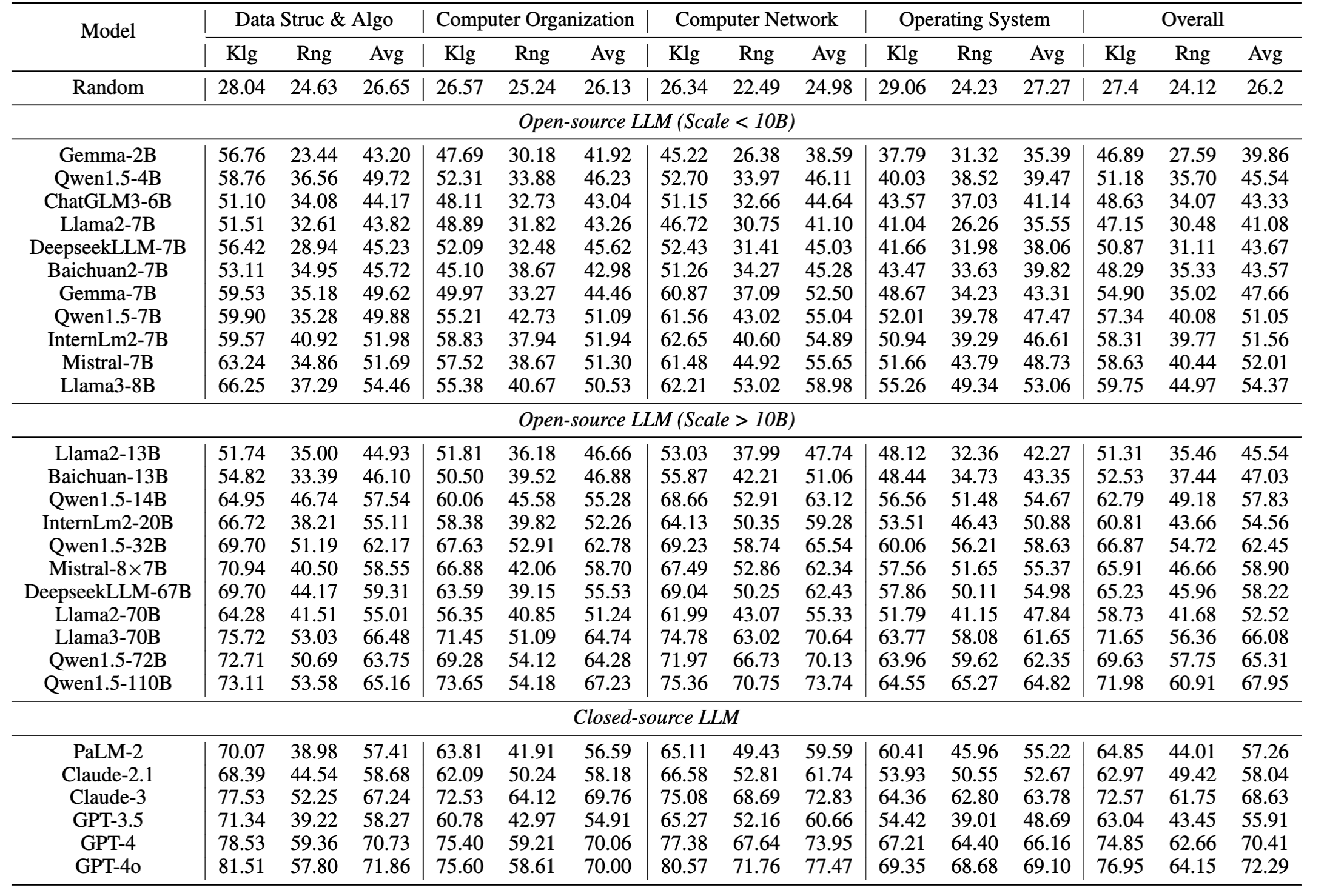

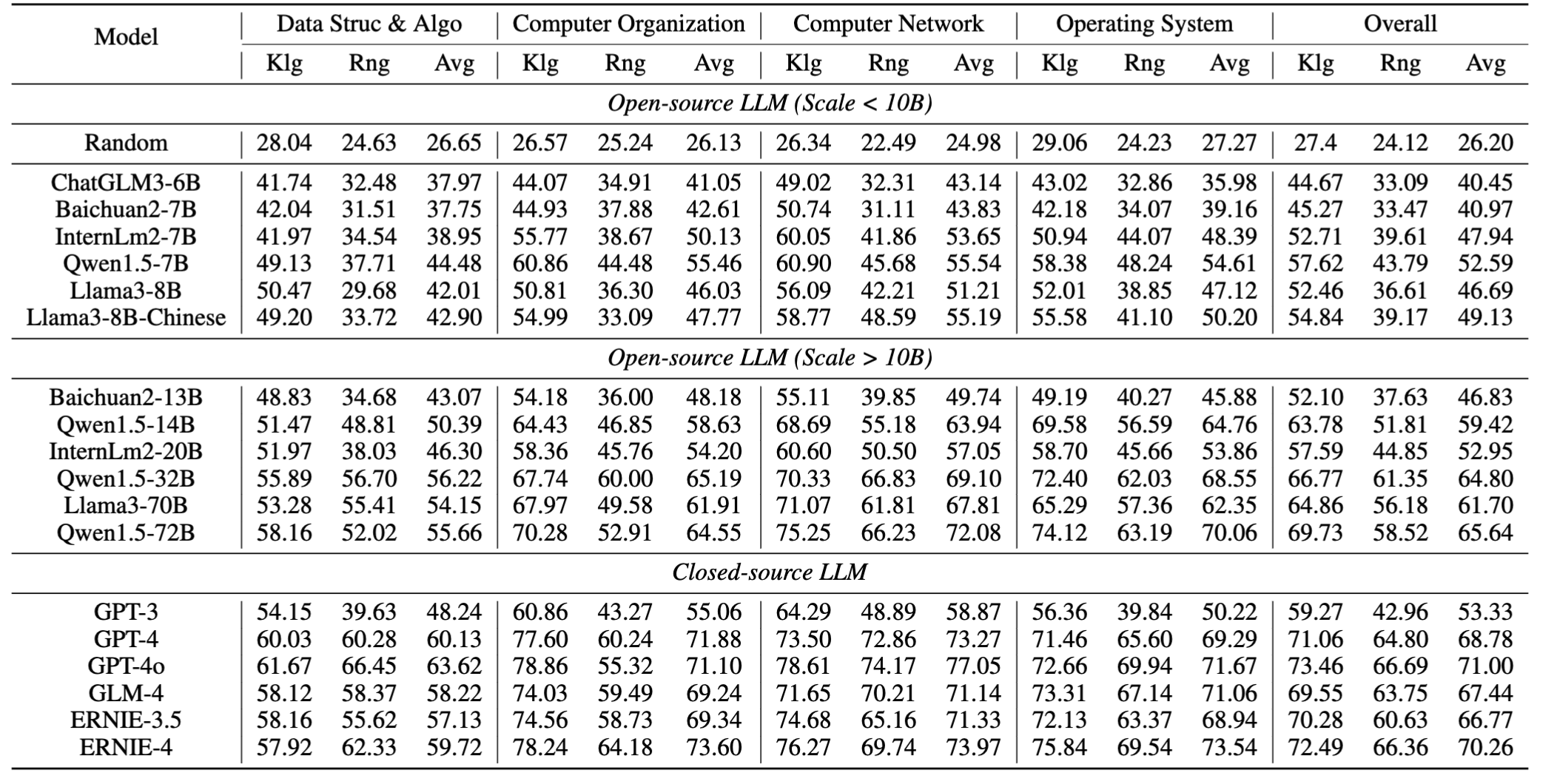

Zero-shot scores (%) of LLMs across domains on CS-Bench (EN), where "Klg" denotes knowledge-type, "Rng" denotes reasoning-type, and "Avg" denotes Average.

The random baseline is weighted by task format, using chance-level scores of 25% for multiple-choice (MC), 50% for assertion, 0% for fill-in-the-blank (FITB), and 10% for open-ended questions.
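Reading the random baseline as a sample-weighted average of the per-format chance scores listed above, it could be computed as below. This is a minimal sketch under that assumption: the function name `random_baseline` and the example counts are hypothetical and are not taken from the CS-Bench release or its actual format distribution.

```python
# Per-format chance-level scores (%) as stated on this page.
CHANCE_SCORE = {
    "MC": 25.0,          # multiple-choice
    "Assertion": 50.0,   # true/false assertion
    "FITB": 0.0,         # fill-in-the-blank
    "Open-ended": 10.0,  # open-ended
}


def random_baseline(format_counts: dict[str, int]) -> float:
    """Sample-weighted average of per-format chance scores (assumed weighting).

    format_counts maps a task format name to its number of samples.
    """
    total = sum(format_counts.values())
    if total == 0:
        raise ValueError("format_counts must contain at least one sample")
    weighted = sum(CHANCE_SCORE[fmt] * n for fmt, n in format_counts.items())
    return weighted / total


# Hypothetical counts for illustration only, not the real CS-Bench distribution.
example = {"MC": 2, "Assertion": 1, "FITB": 1, "Open-ended": 0}
print(random_baseline(example))  # (2*25 + 1*50 + 0 + 0) / 4 -> 25.0
```

Weighting by sample count means a split dominated by assertion questions has a higher chance floor than one dominated by fill-in-the-blank questions.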

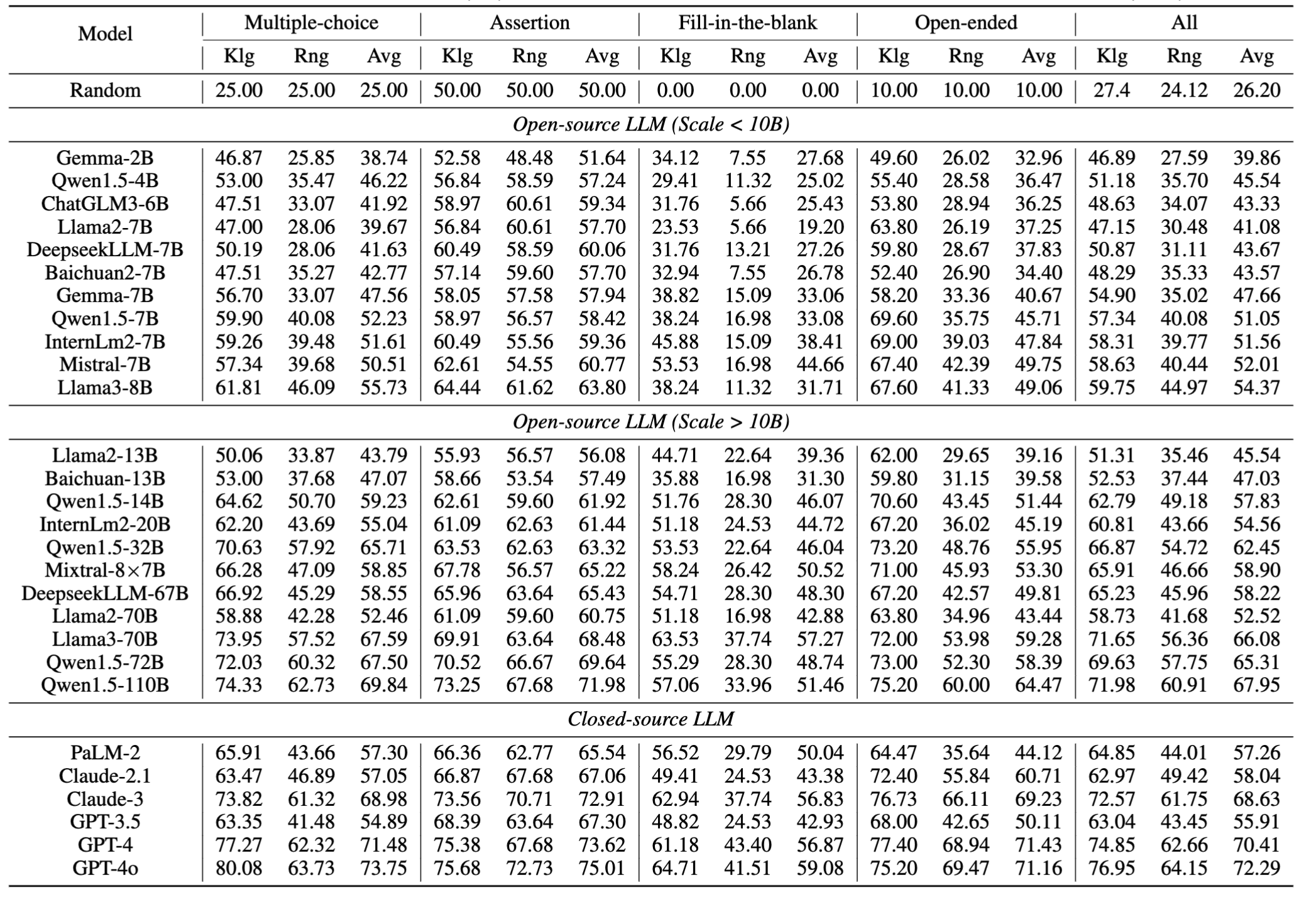

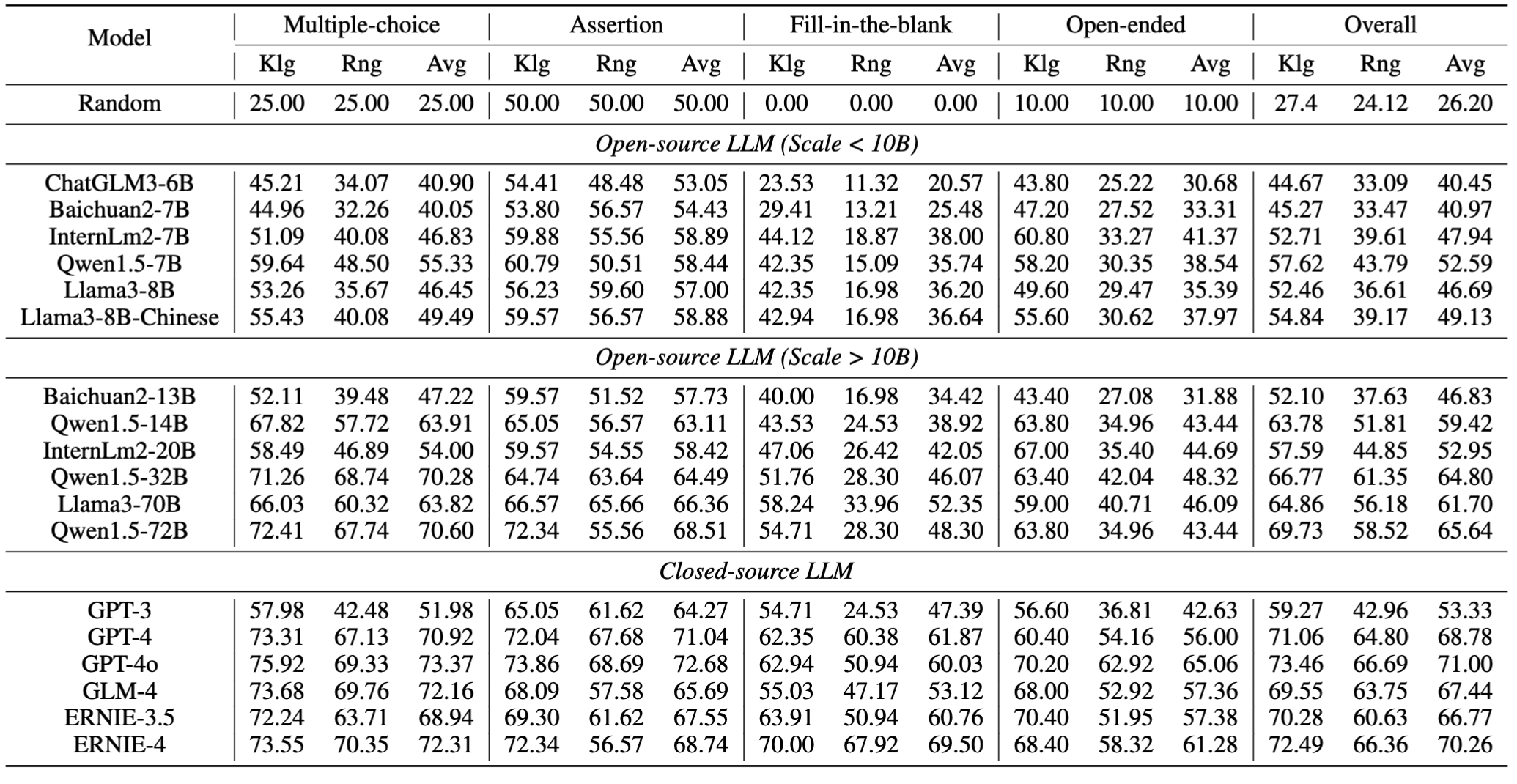

Zero-shot scores (%) of LLMs across task formats on CS-Bench (EN).

CS-Bench (CN)


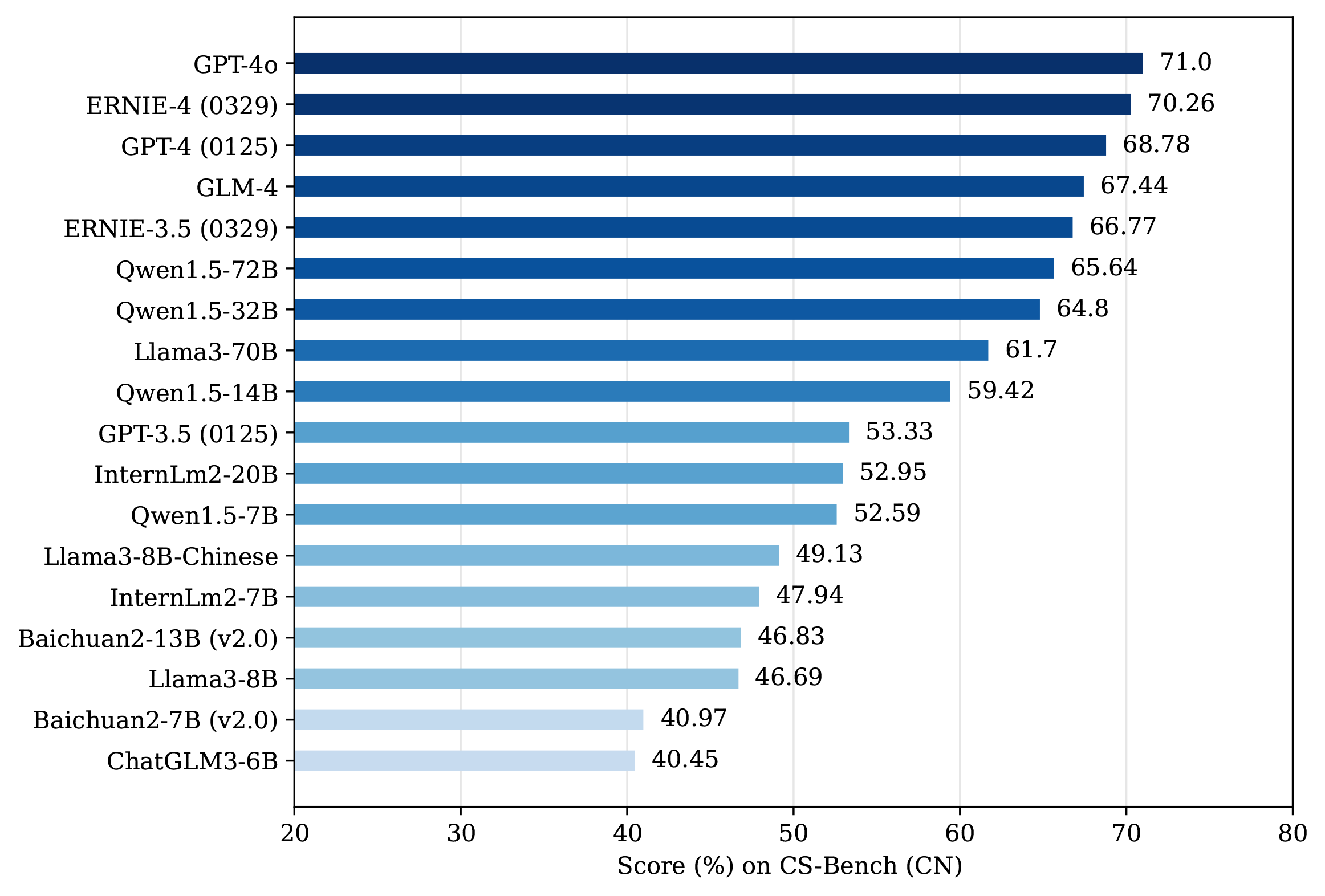

The leaderboard of LLMs on CS-Bench (CN).

Zero-shot scores (%) of LLMs across domains on CS-Bench (CN), where "Klg" denotes knowledge-type, "Rng" denotes reasoning-type, and "Avg" denotes Average.

The random baseline is weighted by task format, using chance-level scores of 25% for multiple-choice (MC), 50% for assertion, 0% for fill-in-the-blank (FITB), and 10% for open-ended questions.

Zero-shot scores (%) of LLMs across task formats on CS-Bench (CN).

🚨 To submit your results to the leaderboard, please send your result JSON files to this email.

CS-Bench Dataset


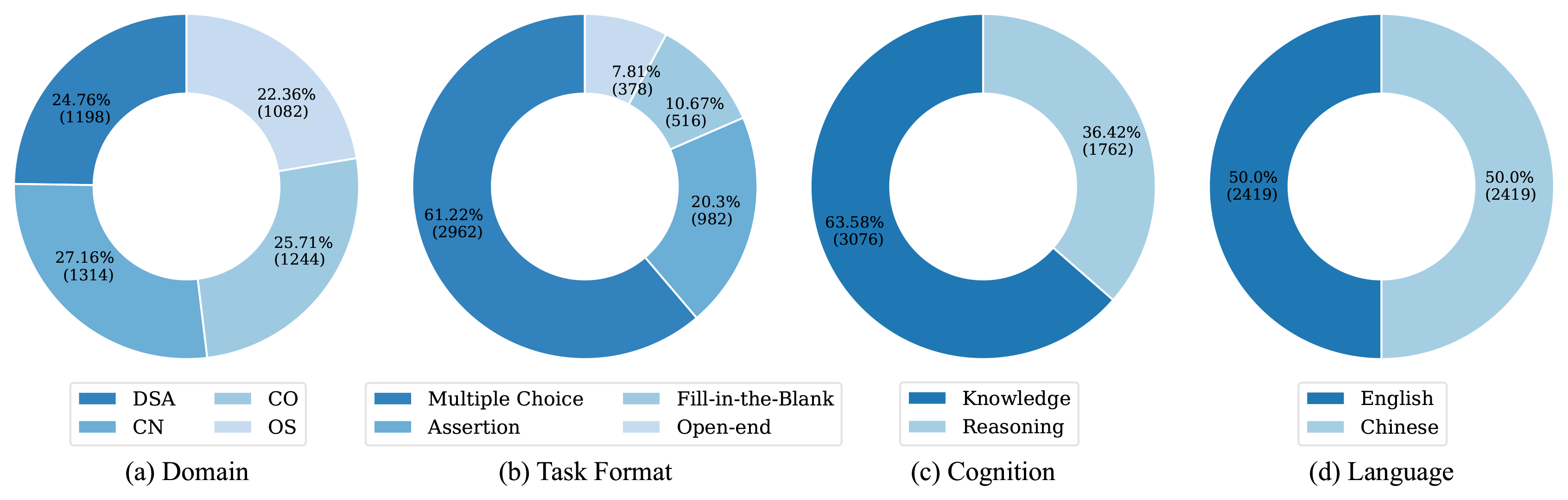

CS-Bench is the first benchmark dedicated to evaluating the performance of LLMs in the field of computer science. CS-Bench supports bilingual assessment and covers 26 subfields across 4 domains, with a total of 4,838 samples. These samples span several task formats, including multiple-choice, assertion, fill-in-the-blank, and open-ended questions. In addition, CS-Bench assesses both knowledge-type and higher-order reasoning-type questions, and each reasoning question is accompanied by an explanation. To validate the effectiveness of models, we randomly sample 10% of the data for validation and use the remaining 90% for testing.
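A 10%/90% validation/test split of this kind can be sketched as a seeded random shuffle followed by a cut. This is a simple unstratified split under stated assumptions: the function name `split_val_test`, the seed, and the rounding rule are hypothetical, and whether the actual split was stratified by domain, format, or language is not stated on this page.

```python
import random


def split_val_test(samples, val_ratio=0.1, seed=0):
    """Randomly hold out `val_ratio` of samples for validation; the rest form the test set.

    Hypothetical helper for illustration; not the official CS-Bench split script.
    """
    items = list(samples)
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    rng.shuffle(items)
    n_val = round(len(items) * val_ratio)  # size of the validation portion
    return items[:n_val], items[n_val:]


# Use sample indices 0..4837 as stand-ins for the 4,838 CS-Bench samples.
val, test = split_val_test(range(4838))
print(len(val), len(test))  # -> 484 4354
```

Fixing the seed keeps the validation set identical across runs, so scores reported on it stay comparable between models.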


The quantity and proportion of each type in different dimensions on CS-Bench.

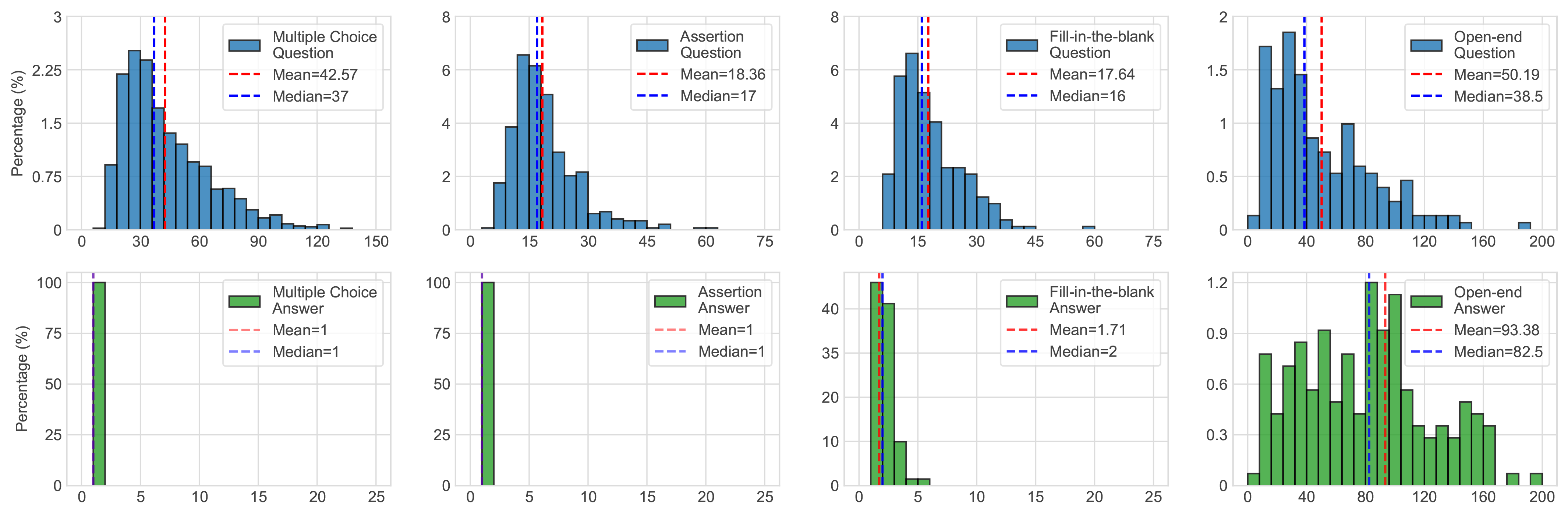

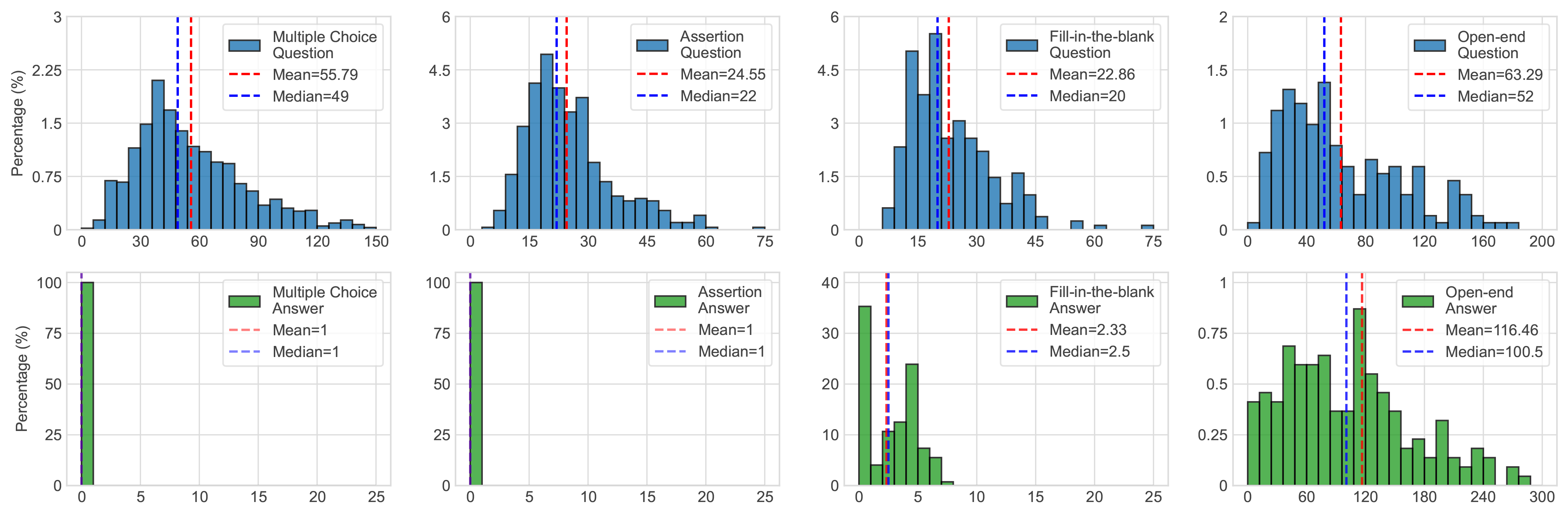

Question and answer lengths of each task format on CS-Bench (EN).

Question and answer lengths of each task format on CS-Bench (CN).

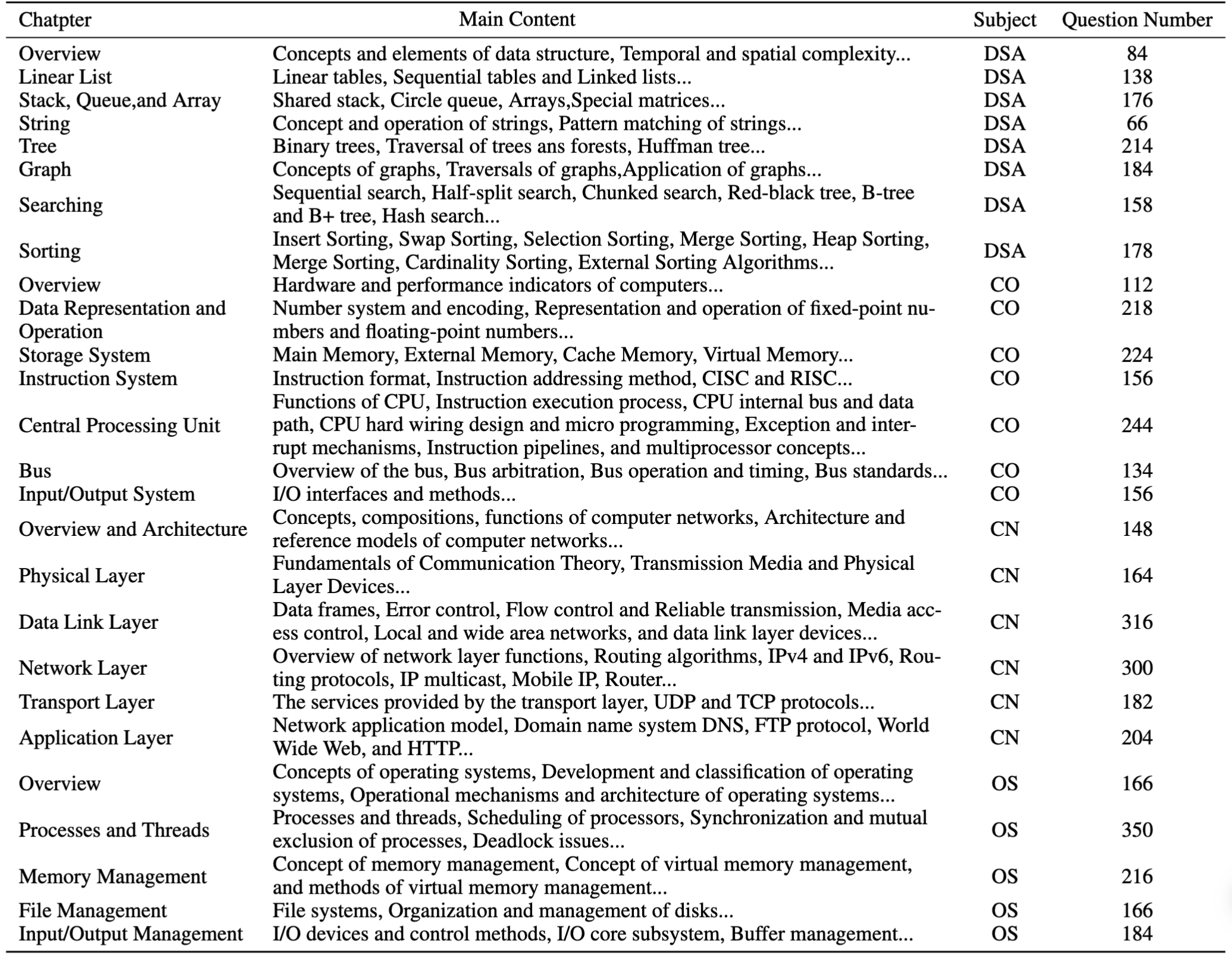

Summary of 26 fine-grained subfields of CS-Bench.

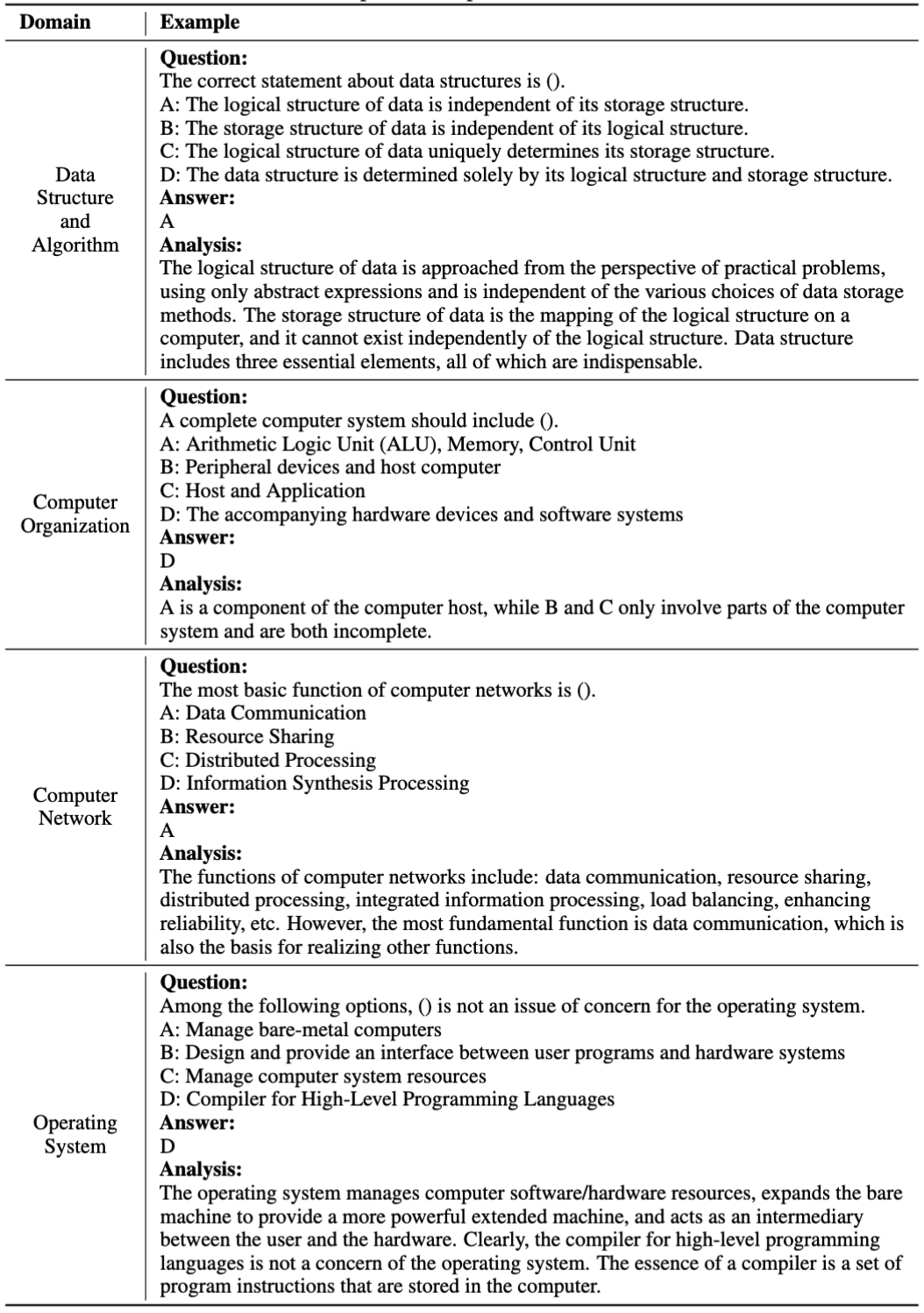

Examples of samples in different domains.

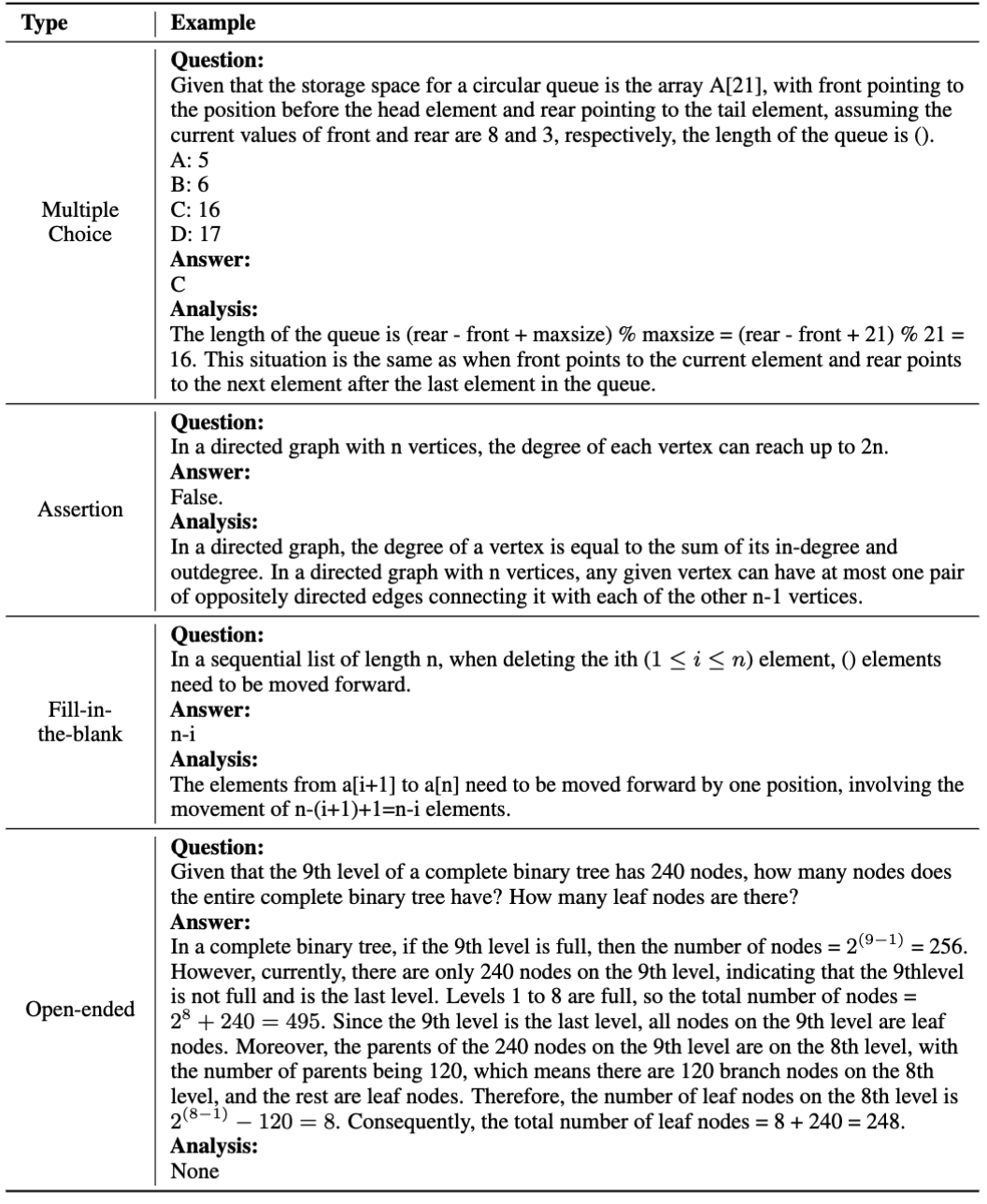

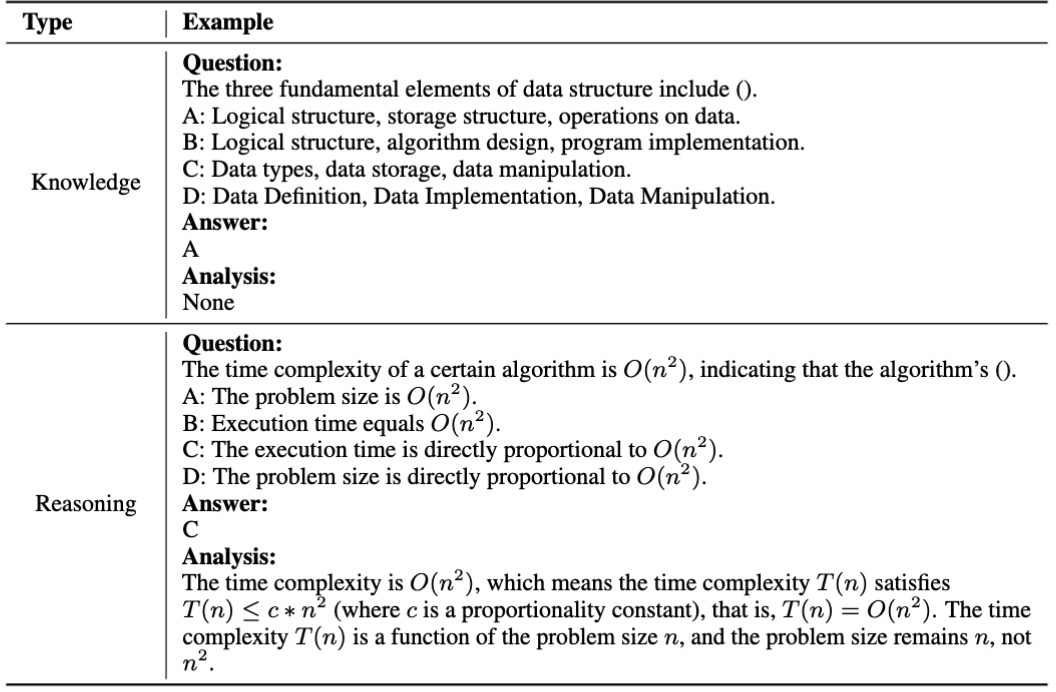

Examples of different task formats.

Examples of knowledge-type and reasoning-type.

Examples of different languages.

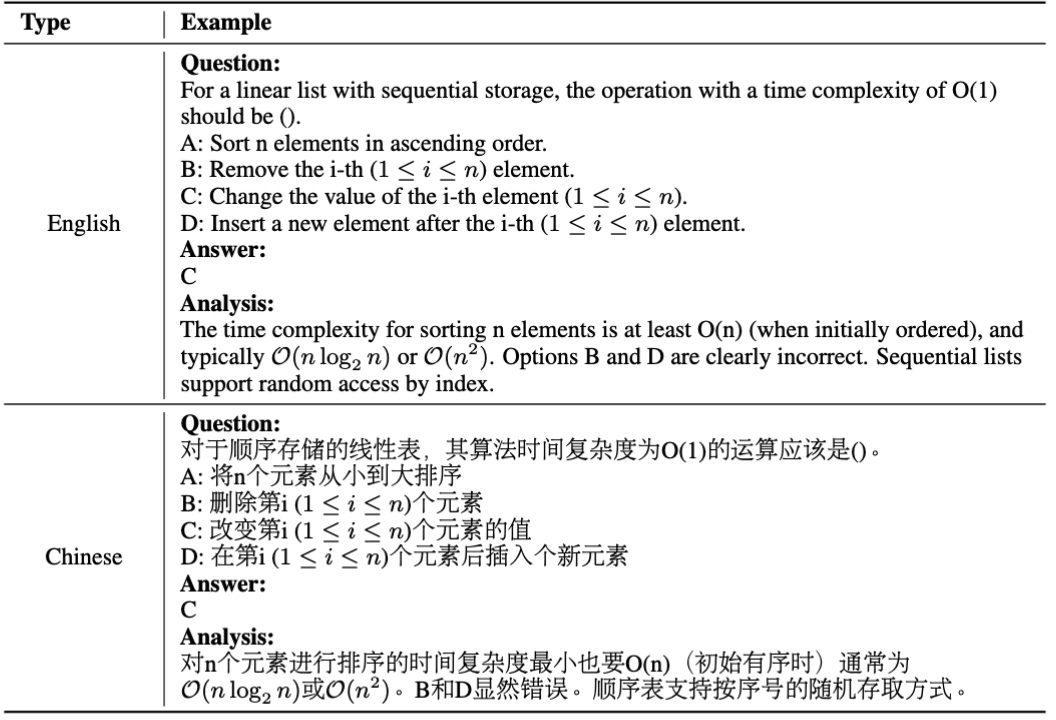

The performance of LLMs at different parameter scales (left).

The scale-score fitting curves of the Qwen1.5 and Llama2 series (right).
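The page does not state the functional form of the scale-score curve. Assuming score grows roughly linearly in the logarithm of parameter count (a common choice for scaling plots, not confirmed by the source), an ordinary least-squares fit could be sketched as follows. `fit_log_scale`, `predict`, and the synthetic data points are hypothetical and are not the authors' fitting code or results.

```python
import math


def fit_log_scale(params_b, scores):
    """Least-squares fit of score ~= a * ln(params_b) + b (assumed curve form).

    params_b: model sizes in billions of parameters (must be > 0).
    scores: matching benchmark scores (%).
    Returns the slope a and intercept b.
    """
    if len(params_b) != len(scores) or len(params_b) < 2:
        raise ValueError("need at least two (size, score) pairs of equal length")
    xs = [math.log(p) for p in params_b]  # regress on log parameter count
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(scores) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("model sizes must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, scores))
    a = sxy / sxx
    b = mean_y - a * mean_x
    return a, b


def predict(a, b, params_b):
    """Score predicted by the fitted curve for a model with params_b billion parameters."""
    return a * math.log(params_b) + b


# Synthetic points generated from score = 5 * ln(N) + 30, so the fit should recover a=5, b=30.
sizes = [1.0, 10.0, 100.0]
synthetic = [5 * math.log(s) + 30 for s in sizes]
a, b = fit_log_scale(sizes, synthetic)
print(round(a, 6), round(b, 6))
```

With a fitted `(a, b)` for one model family, `predict` can extrapolate the expected score of a larger model in the same series, with the usual caution that extrapolation beyond the observed sizes is speculative.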

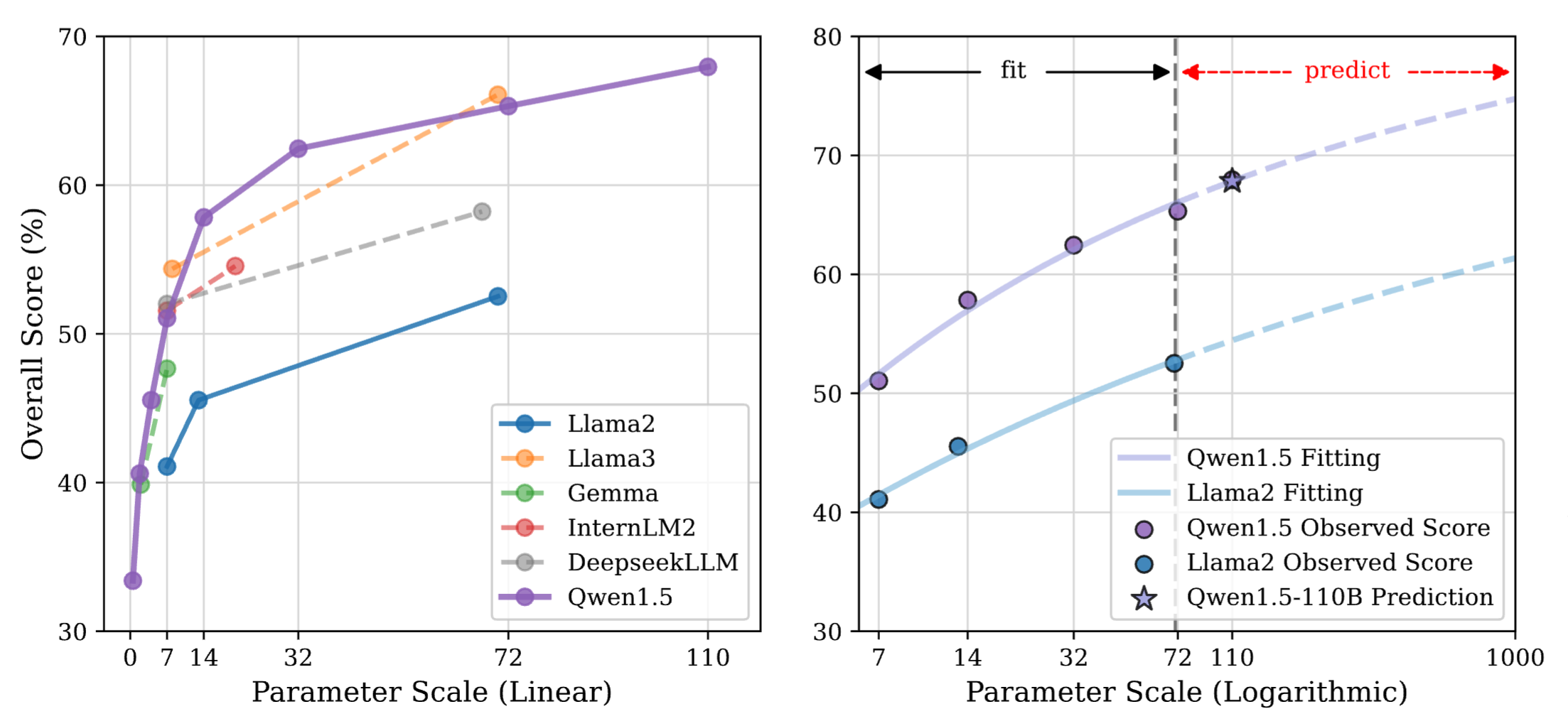

Comparison of models under different settings.

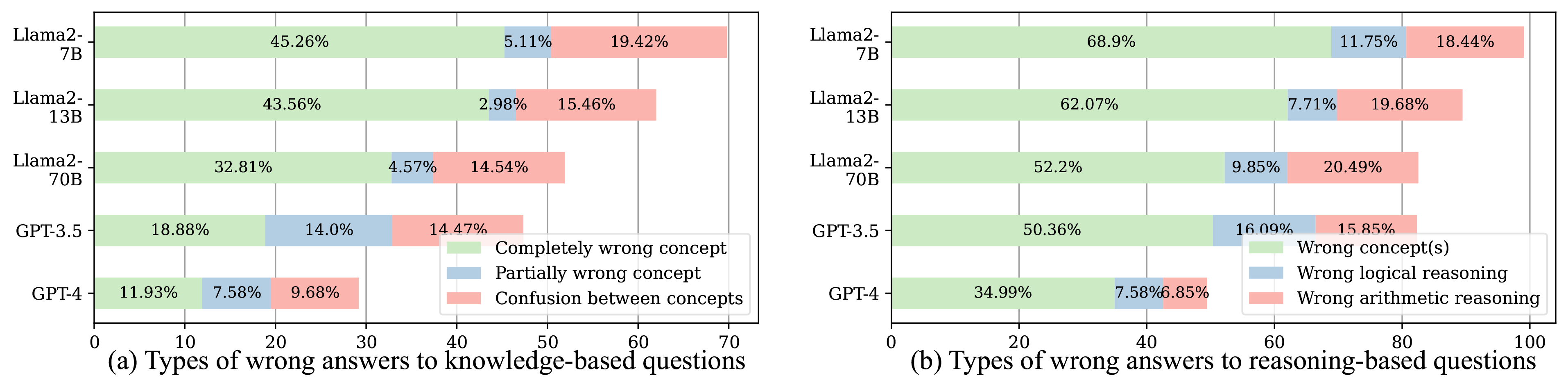

The proportion of different error types on multiple-choice questions, by model.

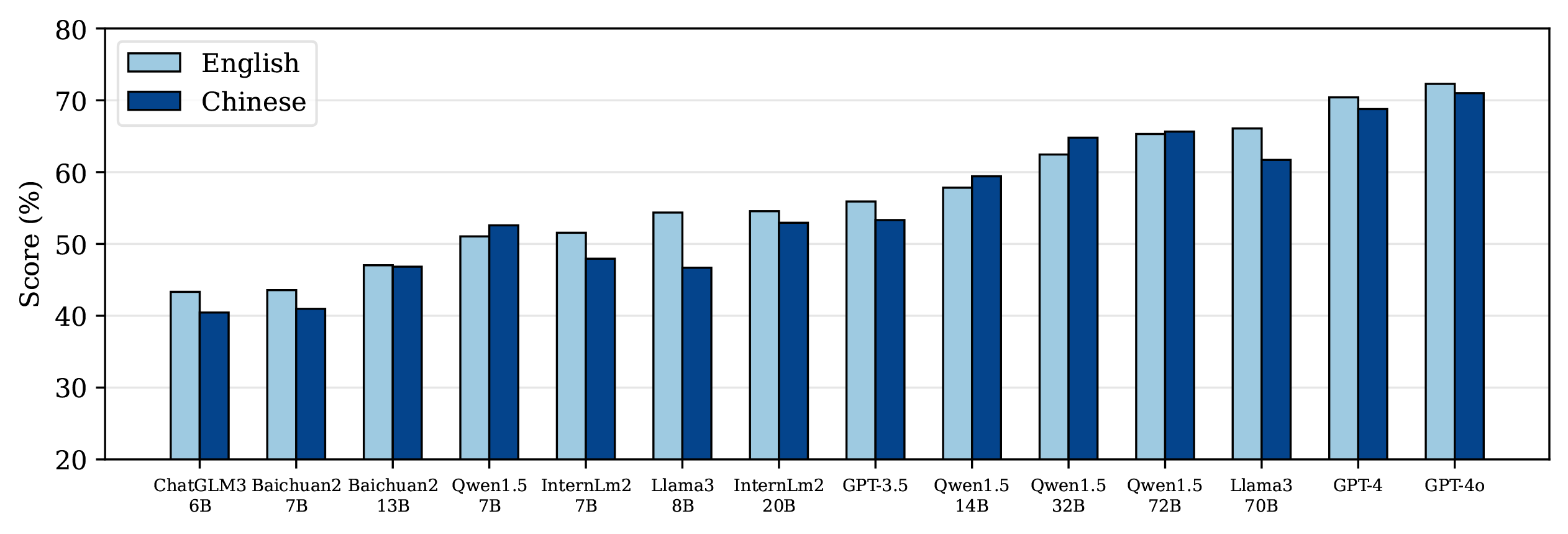

Comparison of models in different languages on CS-Bench.

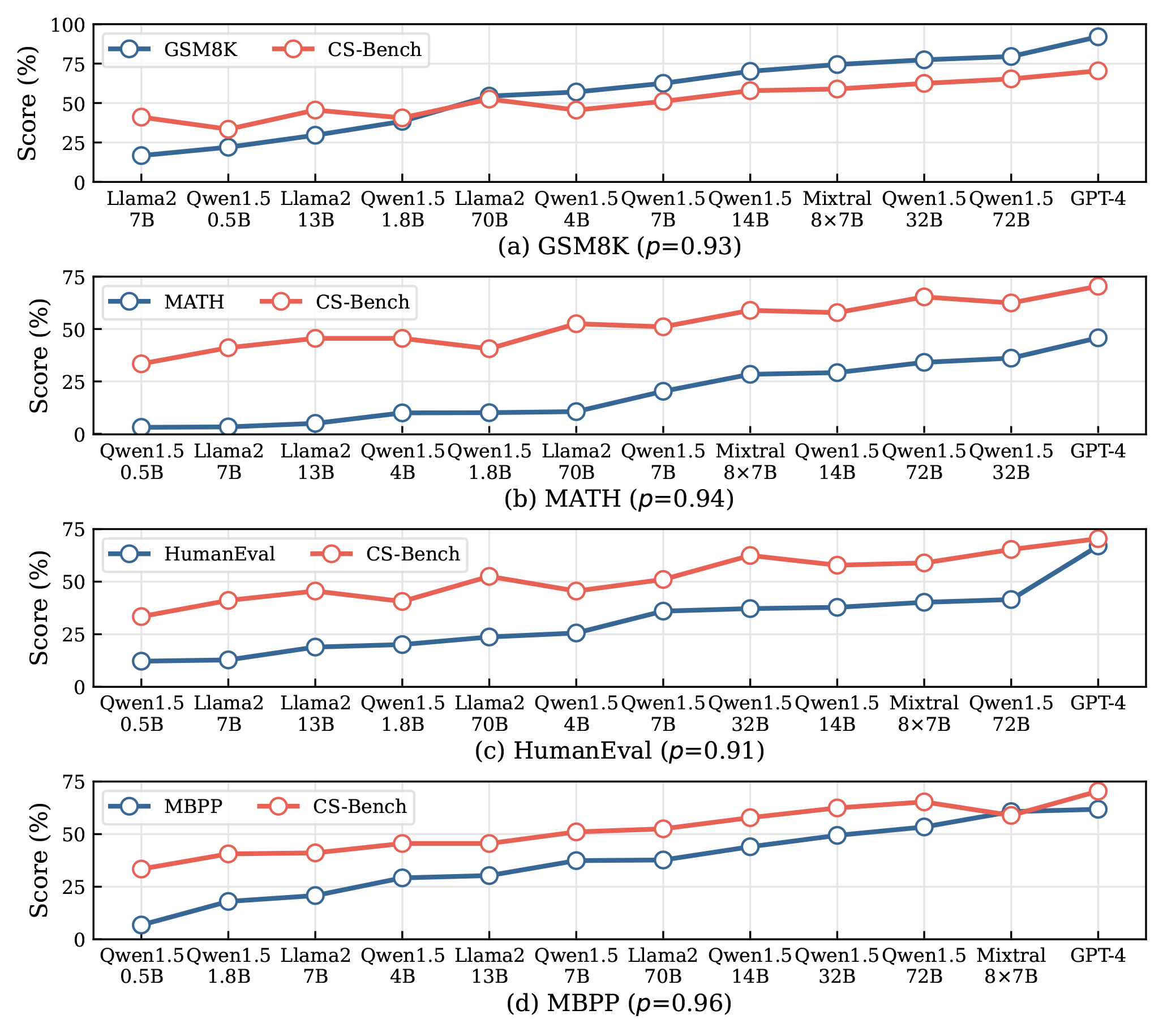

Change in CS-Bench score as LLMs' Math/Code scores increase.

𝒫 denotes the Pearson correlation coefficient.
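The Pearson coefficient 𝒫 measures how linearly a model's CS-Bench score tracks its Math or Code score across a set of models. A dependency-free sketch of the standard sample formula follows; the function name `pearson` and the example score lists are hypothetical and are not values from the leaderboard.

```python
import math


def pearson(xs, ys):
    """Sample Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    dx = [x - mean_x for x in xs]  # deviations from the mean
    dy = [y - mean_y for y in ys]
    cov = sum(a * b for a, b in zip(dx, dy))
    norm = math.sqrt(sum(a * a for a in dx) * sum(b * b for b in dy))
    if norm == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / norm


# Hypothetical per-model scores, for illustration only (not leaderboard values).
math_scores = [1, 2, 3, 4]
cs_scores = [1, 3, 2, 4]
print(pearson(math_scores, cs_scores))  # -> 0.8
```

On Python 3.10+, `statistics.correlation(xs, ys)` from the standard library computes the same coefficient.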

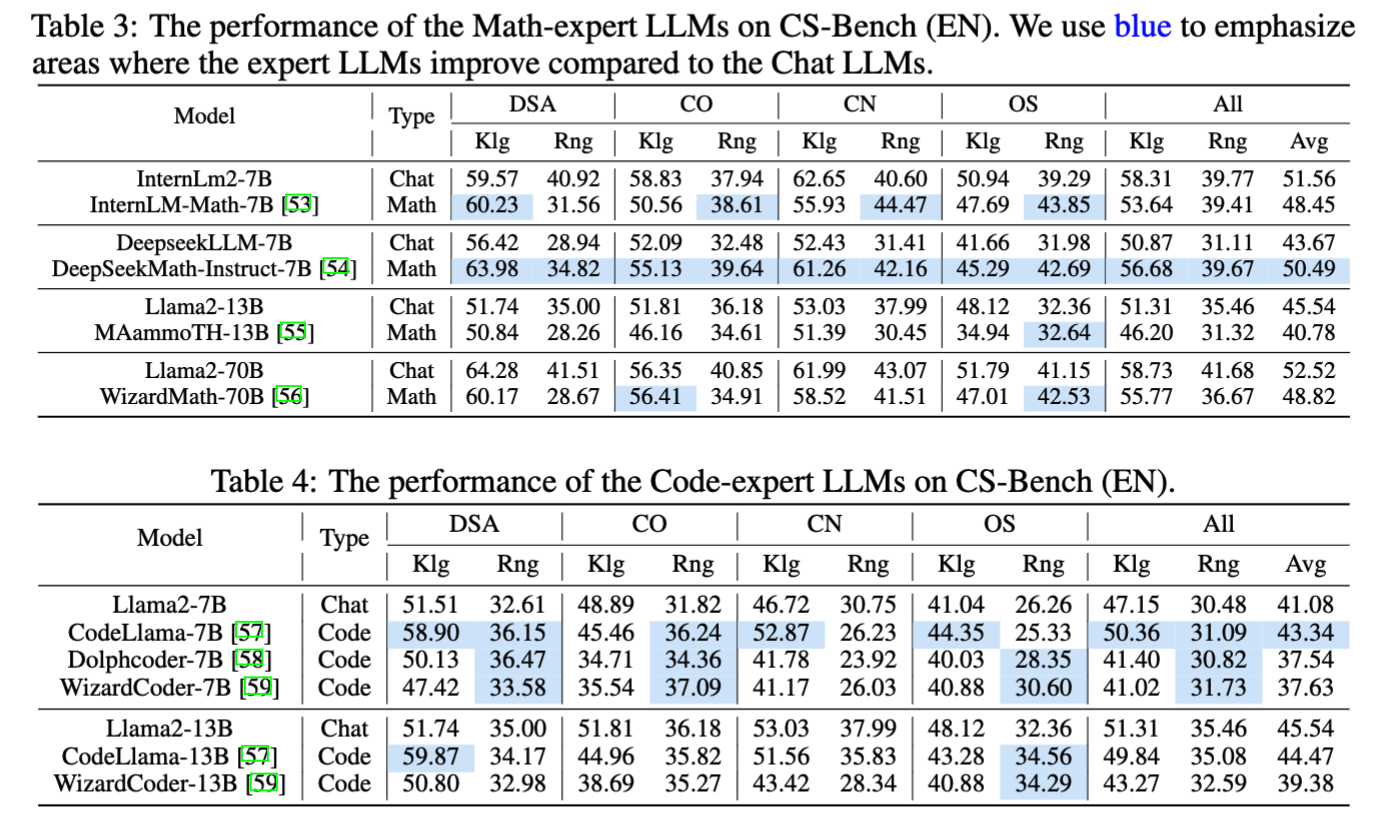

The performance of code- and math-specific expert LLMs on CS-Bench.

@article{song2024cs,
  title={CS-Bench: A Comprehensive Benchmark for Large Language Models towards Computer Science Mastery},
  author={Song, Xiaoshuai and Diao, Muxi and Dong, Guanting and Wang, Zhengyang and Fu, Yujia and Qiao, Runqi and Wang, Zhexu and Fu, Dayuan and Wu, Huangxuan and Liang, Bin and others},
  journal={arXiv preprint arXiv:2406.08587},
  year={2024}
}